PostgreSQL’s rise: one database to rule them all (with DMAP AI as the accelerator)

Postgres wins because its core is extensible (custom data types, index AMs, background workers, FDWs) and predictable (MVCC, WAL, ACID). That lets it shape-shift into many roles without leaving SQL.

Below, each role is broken down into: Capabilities → Design/Indexes → Ops/Tuning → Example. At the end, you’ll find how DMAP AI maps Oracle workloads into the right Postgres patterns automatically.

Get start for free – Book a DMAP AI demo: Book a Call – Newt Global Consulting LLC

1. Time-series database

Capabilities

-

High-ingest writes with COPY, batched inserts, UNLOGGED staging tables.

-

Native partitioning (range/hash) + BRIN indexes for append-only time data.

-

TimescaleDB adds hypertables, automatic chunking, compression (columnar), continuous aggregates.

Design / Indexes

-

Partition by time (e.g., daily/week) and optionally by device/customer.

-

Use BRIN for wide time ranges and GIN on JSONB tags.

-

Prefer UPSERT with ON CONFLICT for idempotent pipelines.

Ops / Tuning

-

synchronous_commit=offfor non-critical ingest pipelines. -

Enable Timescale compression after data cools; set retention policies.

-

Vacuum tuned for high insert/update workloads (

autovacuum_vacuum_scale_factor,autovacuum_analyze_scale_factor).

Example

-- Timescale hypertable

CREATE TABLE metrics(ts timestamptz NOT NULL, device_id int, val double precision, tags jsonb);

SELECT create_hypertable('metrics', 'ts', chunk_time_interval => interval '1 day');

-- BRIN on time, GIN on tags

CREATE INDEX ON metrics USING brin (ts);

CREATE INDEX ON metrics USING gin (tags);

-- Continuous aggregate (5-min rollup)

CREATE MATERIALIZED VIEW metrics_5m

WITH (timescaledb.continuous)

AS SELECT time_bucket('5 min', ts) AS bucket, device_id, avg(val) AS avg_val

FROM metrics GROUP BY 1,2;

2. Geospatial analytics (PostGIS)

Capabilities

-

geometry/geography types, 900+ functions (e.g.,

ST_Intersects,ST_Distance,ST_ClusterKMeans). -

GiST/SP-GiST for R-tree-like indexing; KNN nearest-neighbor with

ORDER BY geom <-> point.

Design / Indexes

-

Normalize SRIDs; use geography for Earth-scale meters, geometry for planar precision.

-

Store boundaries as polygons; points as

POINT; roads asLINESTRING.

Ops / Tuning

-

Analyze geometry columns; ensure selective bounding boxes before expensive predicates.

-

For tiled workloads, pre-cache vector tiles via postgis_tiger_geocoder or external tile cache.

Example

3. Operational OLTP

Capabilities

-

MVCC: readers don’t block writers; visibility managed via hint/visibility maps.

-

WAL + streaming replication: durability + HA; PITR with base backups + WAL archiving.

-

Rich indexing: btree, hash, GiST, SP-GiST, GIN, BRIN, plus partial/expression indexes.

Design / Indexes

-

Model with strict constraints (FKs, CHECKs) to enforce integrity.

-

Use partial indexes for hot subsets; generated columns for computed search keys.

Ops / Tuning

-

Connection pooler (pgBouncer) for microservices.

-

Tune

work_mem,shared_buffers,effective_cache_size. -

Monitor with pg_stat_statements / pg_stat_monitor, enable auto_explain.

Example

4. Analytics / HTAP

Capabilities

-

Parallel query, JIT (LLVM), bitmap scans, hash/merge joins.

-

Foreign Data Wrappers (S3/csv/JDBC) for federation.

-

Columnar options (e.g., citus-columnar) and materialized views.

Design / Indexes

-

Star schema for BI; partition fact tables by date.

-

Use incremental refresh of materialized rollups or Timescale continuous aggs.

Ops / Tuning

-

Increase

work_memfor heavy sorts/aggregates; consider temp tablespaces on fast disk. -

Schedule

REFRESH MATERIALIZED VIEW CONCURRENTLY.

Example

5. Message broker (LISTEN/NOTIFY, pgmq, outbox)

Capabilities

-

LISTEN/NOTIFY for pub-sub; payload up to 8000 bytes.

-

Durable queues with pgmq (SQS-like semantics) or a simple outbox table + workers.

-

Advisory locks for work distribution.

Design / Indexes

-

Outbox pattern: commit event and state change atomically.

-

Use SKIP LOCKED for concurrent workers.

Ops / Tuning

-

Keep NOTIFY volume moderate; for high throughput use pgmq/outbox.

-

Debezium can stream the outbox to Kafka without dual-write.

Example

6. Vector database for AI (pgvector)

Capabilities

-

vectordata type; distances: cosine, L2, inner product. -

Indexes: IVFFLAT (requires training), HNSW (graph-based ANN).

-

Re-ranking with SQL + model scores; hybrid search combining full-text (tsvector) + vector.

Design / Indexes

-

Store metadata alongside embeddings; add GIN on JSONB/tags or FTS.

-

For IVFFLAT choose

lists~ sqrt(N); for HNSW tunem,ef_search.

Ops / Tuning

-

Pin index in memory if possible; use ANALYZE after bulk loads.

-

Batch upserts; rebuild IVFFLAT after large data shifts.

Example

7. Graph database (native + extensions)

Capabilities

-

Recursive CTEs for traversals (trees/graphs).

-

AgensGraph extension adds labeled property graphs + OpenCypher.

Design / Indexes

-

Adjacency tables

(src, dst, weight)with btree indexes. -

Materialize heavy traversals into path tables or use AgensGraph for complex pattern matching.

Ops / Tuning

-

Cap recursion depth; ensure indexes on

(src)and(dst)for fast joins.

Example (recursive CTE)

8. Distributed Postgres (Citus / logical)

Capabilities

-

Citus: coordinator + workers, shard by distribution column; colocation for fast multi-table joins; reference tables for small dimensions.

-

Logical replication for multi-region/active-active patterns; pglogical/BDR options exist.

Design / Indexes

-

Pick a distribution key with high cardinality and locality (e.g., tenant_id).

-

Reference tables broadcast to all shards; ensure compatible primary keys.

Ops / Tuning

-

Online rebalance/reshard; shard count typically 32–128 for large clusters.

-

max_parallel_workers_per_gatherand Citus placement policies impact fan-out performance.

Example (Citus)

Cross-cutting: reliability, security, observability

-

HA: streaming replication + Patroni/repmgr; synchronous standbys for zero-RPO.

-

Backups: base backups + WAL archiving; PITR to any timestamp.

-

Security: roles/privs, RLS for multitenancy, pgaudit for traceability, TLS everywhere.

-

Observability:

pg_stat_statements,pg_stat_kcache,pg_wait_sampling; export to Prometheus/Grafana.

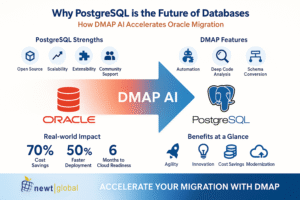

DMAP AI: the Oracle → PostgreSQL accelerator

Enterprises love Postgres but fear migrations. DMAP AI (Newt Global) uses static analysis + AI translation + automated testing to convert Oracle estates into first-class PostgreSQL systems—and pick the right extensions (Timescale, PostGIS, pgvector, Citus) based on workload.

A. End-to-end pipeline

-

Inventory & Telemetry

-

Parse Oracle DDL, dependencies, AWR/ASH, SQL plan baselines.

-

Classify workloads: OLTP, spatial, time-series, analytics, messaging, AI, graph.

-

Schema & Type Mapping

-

NUMBER(p,s)→NUMERIC(p,s)/BIGINT;DATE→timestamp;CLOB→text;RAW→bytea. -

Tablespaces → Postgres tables + tablespaces; Sequences →

GENERATED BY DEFAULT AS IDENTITY.

-

Code Translation (PL/SQL → PL/pgSQL)

-

Packages/procedures/functions/triggers converted;

%TYPE/%ROWTYPE,EXCEPTIONblocks handled. -

CONNECT BY→WITH RECURSIVE;MERGE→ native PostgresMERGE. -

DBMS_SCHEDULER→ cron/pg_cron;DBMS_JOB→ pg_jobs implementation. -

Advanced Queuing → outbox/pgmq/NOTIFY;

SYS_REFCURSOR→REFCURSOR/set-returning functions.

-

Feature Remapping

-

Spatial: SDO_GEOMETRY → PostGIS; rebuild spatial indexes (GiST).

-

Time-series: interval partitioned tables → Timescale hypertables.

-

Text/JSON: Oracle Text → Postgres FTS (

tsvector) + GIN. -

Analytics: materialized views →

MATERIALIZED VIEW [CONCURRENTLY]; star schemas + Citus if needed. -

AI: semantic features → pgvector; DMAP proposes dimensionality & distance metric.

-

Performance & Plan Alignment

-

Rewrite anti-patterns: implicit conversions,

NVL→COALESCE, function-on-column to computed columns + indexes. -

Hint translation: Oracle hints → planner settings, index strategies, or Citus colocations.

-

Index advisor suggests btree/GIN/GiST/BRIN based on predicate selectivity.

-

Data Move & CDC

-

Bulk load via

COPYor parallel loaders; validate row counts + checksums. -

Zero-downtime cutover with logical replication or Debezium CDC; switch with minimal RPO/RTO.

-

Automated Testing

-

Golden tests: same inputs, identical outputs between Oracle and Postgres.

-

Query-level diff on result sets, row-count diffs, perf thresholds; CI-style reports.

-

Hardening & SRE

-

HA topology (Patroni), PITR retention, pgaudit policies, RLS templates for multitenant apps.

-

Observability dashboards; slow-query budgets enforced via

pg_stat_statements.

B. Example mappings (snippets)

C. How DMAP AI picks the right Postgres “persona”

-

High ingest + time windows detected → Recommend Timescale (hypertables, compression, continuous aggs).

-

Spatial types/functions spotted → Map to PostGIS and rebuild GiST indexes.

-

LLM/vector queries or cosine similarity observed → Introduce pgvector with HNSW, tune

ef_search. -

Tenant key & cross-tenant joins → Propose Citus with colocation or reference tables.

-

AQ/queue usage → Convert to outbox + worker or pgmq.

D. Cutover blueprint (zero-downtime)

-

Provision Postgres/Citus/Timescale per DMAP plan

-

Bulk load + build indexes/materialized views

-

Start CDC; validate deltas until drift ~0

-

Shadow-read in production → dual-write if needed → switch DNS/app conn

-

Freeze Oracle; finalize CDC; PITR snapshot; decommission

Putting it together: a real-world reference stack

-

Core: PostgreSQL 16, Patroni HA, pgBouncer pooling

-

Extensions: Timescale (metrics), PostGIS (maps), pgvector (semantic search), Citus (sharding)

-

Pipelines: Outbox + Debezium to Kafka; S3 FDW for data lake access

-

Observability:

pg_stat_statements, Prometheus, Grafana;auto_explainin prod-safe mode -

Security: TLS, SCRAM, RLS for tenants,

pgaudit -

Ops: WAL archiving to object storage; PITR scripts; chaos drills quarterly

-

Migration: DMAP AI for translation, testing, CDC, and optimization guidance

Bottom line

-

Postgres delivers time-series, geospatial, OLTP, OLAP, messaging, vector, graph, and distributed in one consistent platform with first-class extensions.

-

DMAP AI removes Oracle lock-in with automated translation, performance tuning, CDC cutover, and workload-aware recommendations—so enterprises can standardize on Postgres without risk.

Get start for free – Book a DMAP AI demo: Book a Call – Newt Global Consulting LLC